Statistics [23]: Monte Carlo

Published:

Monte Carlo (MC) technique is a numerical method that makes use of random numbers to solve mathematical problems for which an analytical solution is not known.

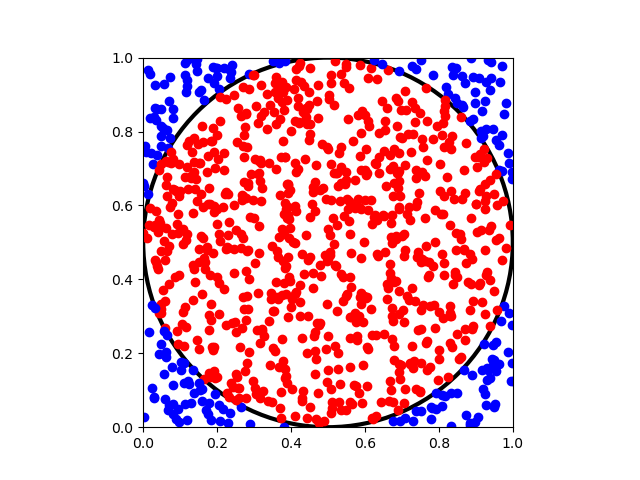

A Simple Example: Estimating Pi

Assume we have a circle with radius

inscribed within a square

. The area ratio would be

To determine , we can randomly select

points in the square. Suppose

points fall within the circle, we can then approximate the ratio by

Hence,

Here is the Python code.

import numpy as np

import random

import math

import matplotlib.pyplot as plt

inCircle = 0

outCircle = 0

piValue = []

# number of trials

numTrial = 1

# number of points within each trial

numPoints = 1000

data = np.random.random([numPoints,2])

fig = plt.figure()

for trial in range(numTrial):

for i in range(numPoints):

if (data[i][0]-0.5)**2 + (data[i][1]-0.5)**2 <= 1/4:

plt.plot(data[i][0],data[i][1],'ro')

inCircle += 1

else:

plt.plot(data[i][0],data[i][1],'bo')

outCircle += 1

piValue.append(4*inCircle/(inCircle + outCircle))

# result

print(sum(piValue)/len(piValue))

# plot

plt.xlim(0, 1)

plt.ylim(0, 1)

ax = fig.add_subplot(1, 1, 1)

plt.gca().set_aspect('equal', adjustable='box')

circ = plt.Circle((0.5, 0.5), 0.5, color='k',fill=False, linewidth=3)

ax.add_patch(circ)

plt.show()

The Law of Large Numbers

Assume are

independent samples from the same distribution with

. Let

Then

Further assume ,

.

Weak Law of Large Numbers (Khinchin’s Law)

For and

, there is

which states that the sample average converges in probability towards the expected value.

Weak Law of Large Numbers ( Kolmogorov’s Law)

For , there is

which states that the sample average converges almost surely towards the expected value.

MC Integration

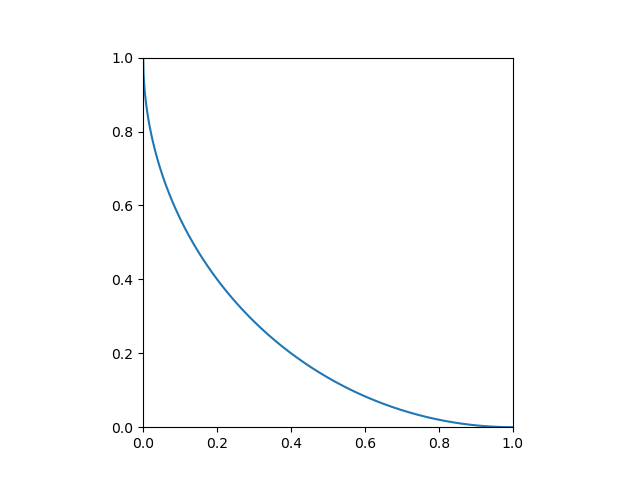

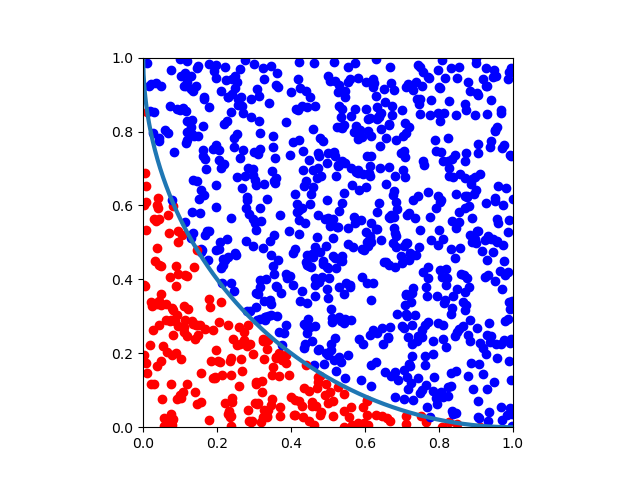

A Simple Example

Find .

Firstly, let’s plot the curve of , the area under the curve would be

.

To determine , we can randomly select

points in the square

. Suppose

points fall under the curve, we can then approximate

by

underCurve = 0

aboveCurve = 0

pValue = []

# number of trials

numTrial = 1

# number of points within each trial

numPoints = 1000

data = np.random.random([numPoints,2])

fig = plt.figure()

for trial in range(numTrial):

for i in range(numPoints):

x = data[i][0]

y = 1 - np.sqrt(x*(2-x))

if data[i][1] <= y:

plt.plot(data[i][0],data[i][1],'ro')

underCurve += 1

else:

plt.plot(data[i][0],data[i][1],'bo')

aboveCurve += 1

pValue.append(underCurve/(underCurve + aboveCurve))

# result

print(sum(pValue)/len(pValue))

x = np.linspace(0,1,1000)

y = 1 - np.sqrt(x*(2-x))

plt.plot(x,y,linewidth=3)

plt.xlim(0, 1)

plt.ylim(0, 1)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()

General Case

Regular Domain of Integration

Find , where the domain of integration is

.

We can randomly select points in

, then

Hence,

Non-Regular Domain of Integration

Find .

Similarly, we can randomly select points in

that includes

. Suppose

of the

points fall within

, then

Hence,

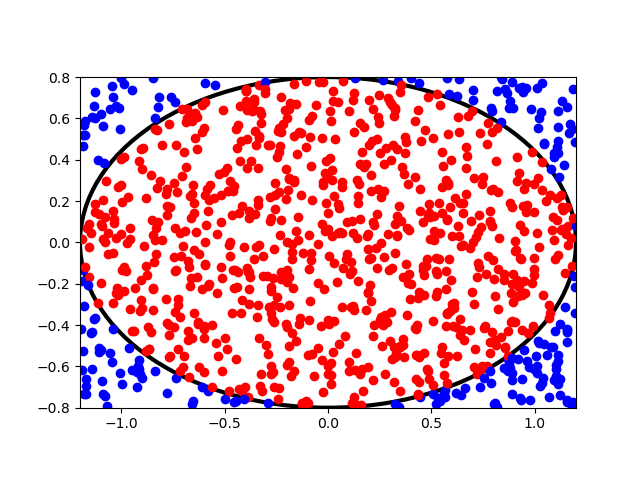

Example

In a shooting practice, assume the target is an ellipse with and the probability density function of hitting the target is

with

. Find

, where

.

#

a = 1.2

b = 0.8

sx = 0.6

sy = 0.4

result = []

# number of trials

numTrial = 1

# number of points within each trial

numPoints = 1000

fig = plt.figure()

for trial in range(numTrial):

z = 0

for i in range(numPoints):

x = np.random.random()*2*a-a

y = np.random.random()*2*b-b

if x**2/a**2 + y**2/b**2 <= 1:

plt.plot(x,y,'ro')

u = np.exp(-0.5*(x**2/sx**2 + y**2/sy**2))

z += u

else:

plt.plot(x,y,'bo')

P = 4*a*b/numPoints*z/2/pi/sx/sy

result.append(P)

# result

print(sum(result)/len(result))

# plot

mean = [0 , 0]

width = 2.4

height = 1.6

ell = mpl.patches.Ellipse(xy=mean, width=width, height=height, fill=False,linewidth=3)

ax = fig.add_subplot(1, 1, 1)

ax.add_patch(ell)

plt.xlim(-1.2, 1.2)

plt.ylim(-0.8, 0.8)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()

Table of Contents

- Probability vs Statistics

- Shakespear’s New Poem

- Some Common Discrete Distributions

- Some Common Continuous Distributions

- Statistical Quantities

- Order Statistics

- Multivariate Normal Distributions

- Conditional Distributions and Expectation

- Problem Set [01] - Probabilities

- Parameter Point Estimation

- Evaluation of Point Estimation

- Parameter Interval Estimation

- Problem Set [02] - Parameter Estimation

- Parameter Hypothesis Test

- t Test

- Chi-Squared Test

- Analysis of Variance

- Summary of Statistical Tests

- Python [01] - Data Representation

- Python [02] - t Test & F Test

- Python [03] - Chi-Squared Test

- Experimental Design

- Monte Carlo

- Variance Reducing Techniques

- From Uniform to General Distributions

- Problem Set [03] - Monte Carlo

- Unitary Regression Model

- Multiple Regression Model

- Factor and Principle Component Analysis

- Clustering Analysis

- Summary

Comments